I did two major things for this Jitter project:

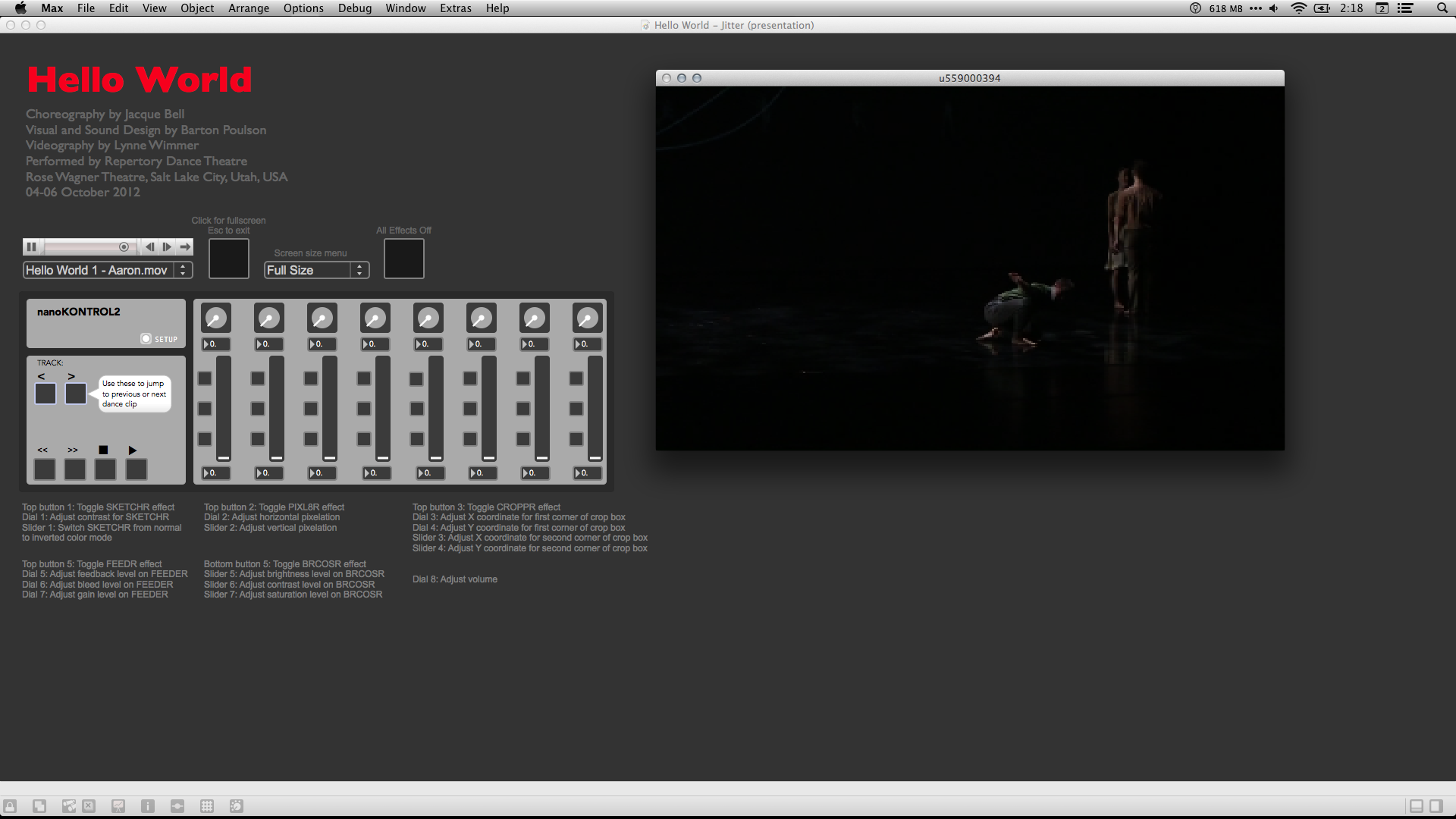

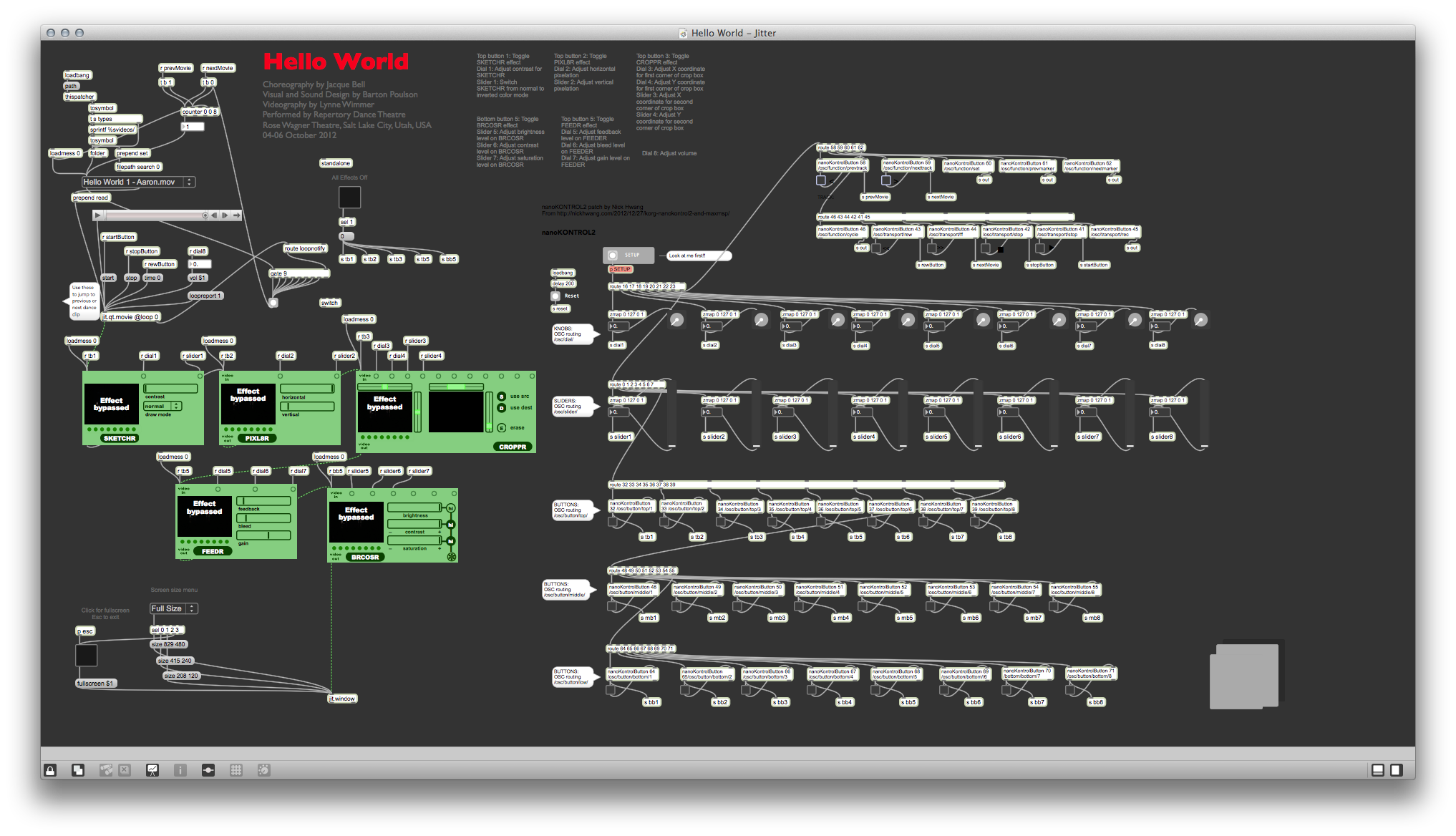

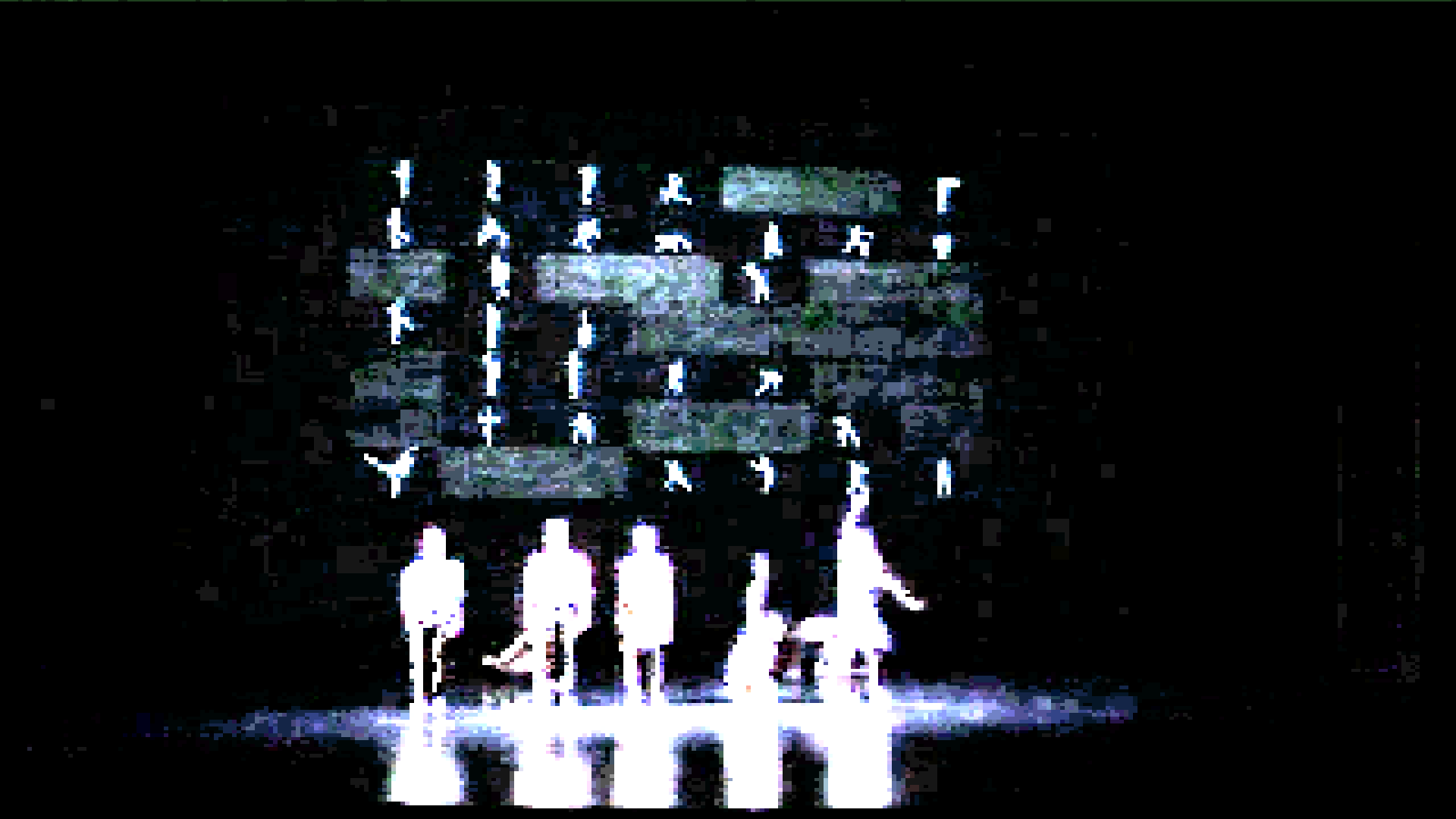

- Worked with several different visual effects within Jitter (as facilitated by the Vizzie modules); and

- Experimented with using a hardware controller – a Korg nanoKONTROL2, in this case – to manipulate video in real time.

Overall, it was a lot of fun, and I think there's a lot of potential there. Not everything in the patch worked reliably, so I'll spend the next several months working out the kinks and learning how to use my other hardware: the Kinects, Novation Launchpads, Akai APC40 and APC20, KMI SoftStep and QuNeo, and the projectors. (That's the nice thing about grant money – you can get some excellent gear!)

The major lesson is that a lot of this is much, much easier to do in Max/MSP/Jitter than in Processing, which is what I have been using for the last two or three years. The programming is easier, the performance seems much smoother, and the hardware integration is way, way easier. (I find it curious, though, that there are hardly any books about Max/MSP/Jitter, while there are at least a dozen fabulous books about Processing. Go figure.)

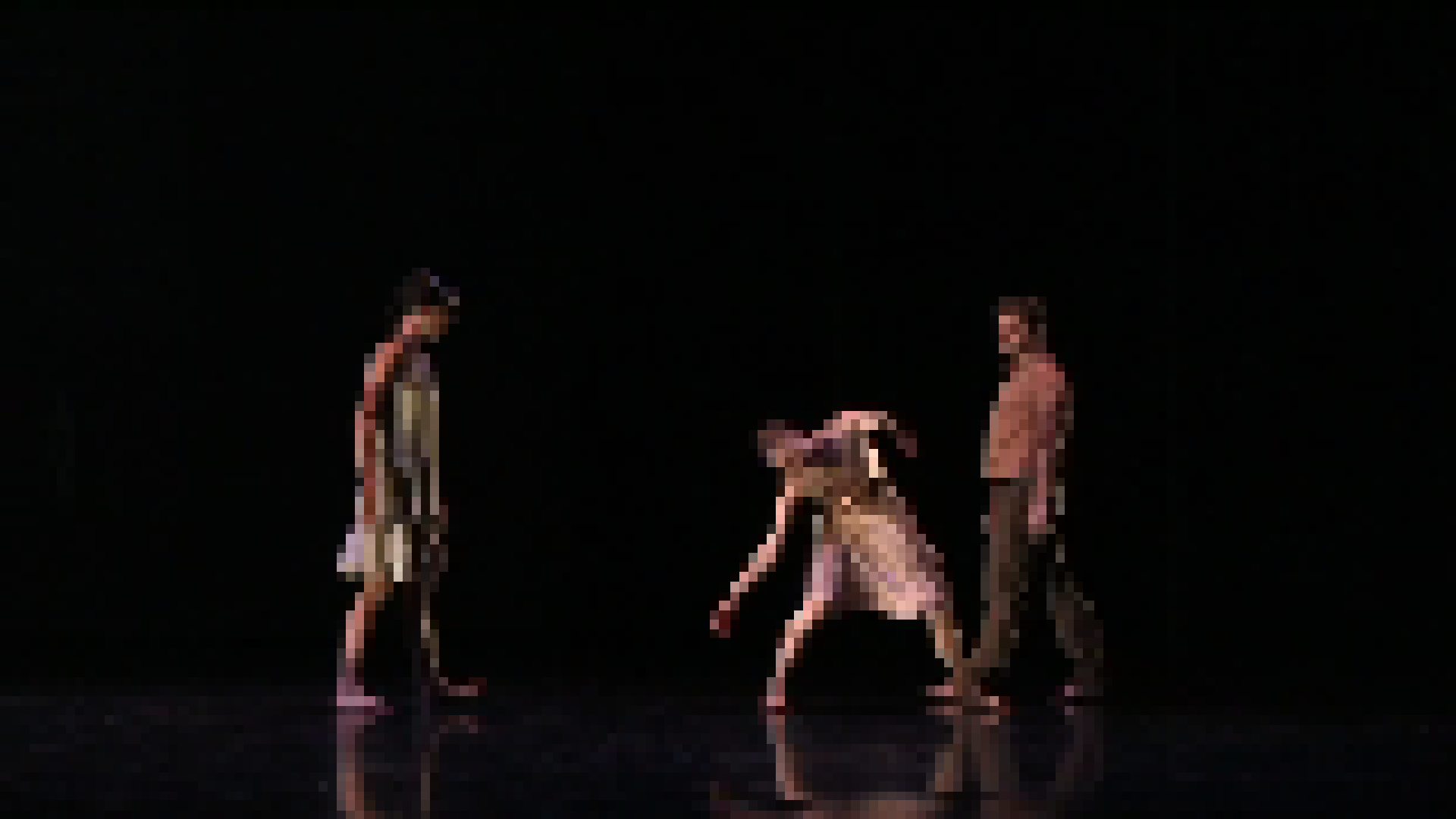

I've included a few still shots at the top of this post and, below, a rather lengthy walk-through of the patch (where not much seems to be working right at the moment...).

[youtube=http://youtu.be/NR_mlAQUipM]